The final goal of the project is to predict the motion that a patient will perform during a rehabilitation task. However, this is only possible if we know the position of the patient’s limb at all times. We therefore need an accurate, real-time measurement of the limb’s position.

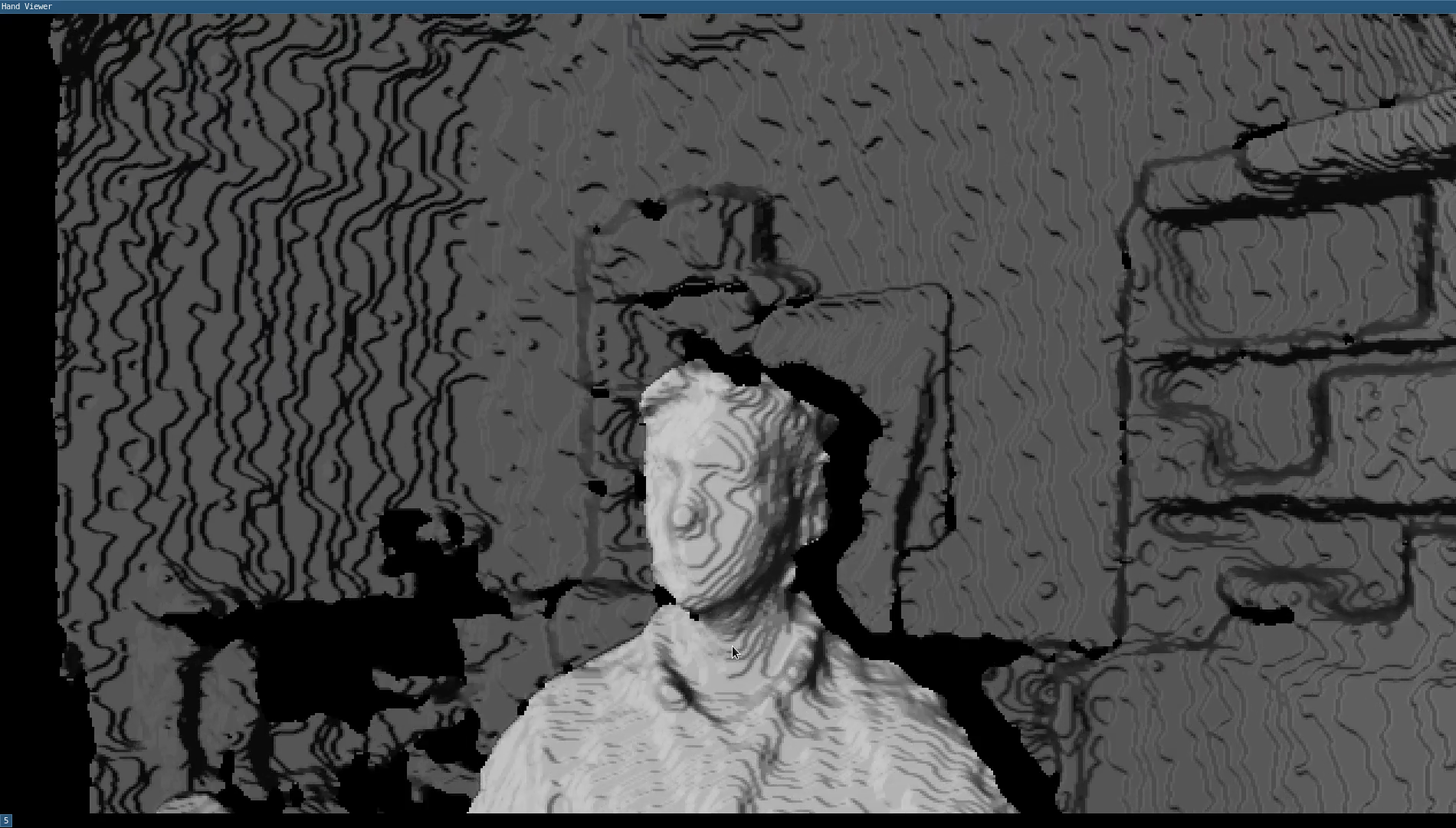

Our first approach is to take this measurement using a ‘depth camera’, a device that measures not only differences in colour but also the distance to the objects in view. This lets the camera determine the position of objects in three dimensions, which is what our project needs.
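To make the idea of "position in three dimensions" concrete, here is a minimal sketch of how a single depth pixel can be turned into a 3D point. It assumes a simple pinhole camera model; the function name `deproject_pixel` and the intrinsic values used in the example (`fx`, `fy`, `cx`, `cy`) are made up for illustration and are not the calibration of any particular camera.

```python
from typing import Tuple


def deproject_pixel(
    u: float, v: float, depth_m: float,
    fx: float, fy: float, cx: float, cy: float,
) -> Tuple[float, float, float]:
    """Convert a pixel (u, v) with a measured depth into a 3D point.

    Assumes an ideal pinhole camera (no lens distortion):
    fx, fy are focal lengths in pixels, (cx, cy) is the principal point,
    and depth_m is the distance along the optical axis in metres.
    """
    x = (u - cx) * depth_m / fx  # metres to the right of the optical axis
    y = (v - cy) * depth_m / fy  # metres below the optical axis
    z = depth_m                  # metres in front of the camera
    return (x, y, z)


# Hypothetical intrinsics for a 640x480 depth image (illustrative only).
point = deproject_pixel(u=420, v=240, depth_m=2.0,
                        fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(point)
```

A pixel at the image centre maps to a point straight ahead of the camera, and pixels further from the centre map to points further off-axis, scaled by their depth.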

Me, as captured by the depth camera!

Combined with image processing, such a camera can now pick out a human, segment their body and extract the position and orientation of individual body parts (usually the torso and the limbs). We are planning to use this approach because it requires no interaction with the patient (no sensors or markers attached to them) and no post-processing (which is common with marker-based tracking systems). This makes it possible to measure and analyse in real time, which is crucial for our project.

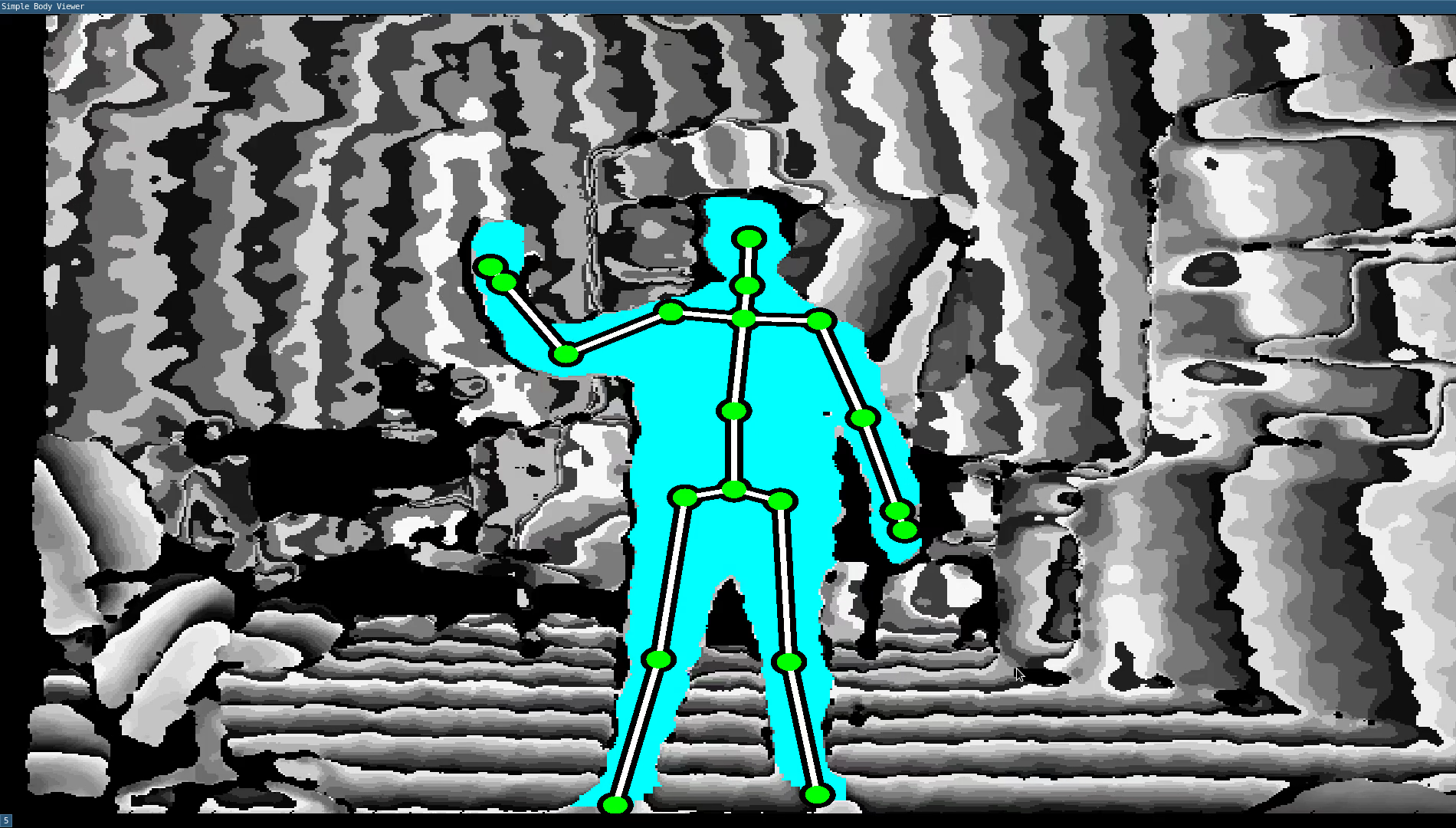

Waving to the camera!

I recently finished the workflow for capturing the data from the camera and translating it into points that I can visualise in Rviz, a powerful visualisation environment used in robotics. We are using an Astra Pro camera, produced by Orbbec. The visualisation will not be necessary in the final project, but it might be motivating for patients to see it. Below, you can see a short demonstration of how the camera tracks my movements and translates them into marker positions. The markers correspond to my joint positions and track the following joints and segments:

- Head & neck

- Top, middle, and lower spine

- Left and Right Shoulder, Elbow, and Hand

- Left and Right Hip, Knee, and Foot
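
As a rough illustration of how the joints listed above could be turned into markers, here is a small sketch in plain Python. It is not the actual pipeline: the joint names, the `JointMarker` class and the `skeleton_to_markers` helper are all hypothetical, and the step that would publish the markers to the visualiser is left out.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]

# Hypothetical names for the tracked joints listed above.
CENTRAL_JOINTS = ["head", "neck", "spine_top", "spine_mid", "spine_low"]
SIDE_JOINTS = ["shoulder", "elbow", "hand", "hip", "knee", "foot"]
JOINT_NAMES: List[str] = CENTRAL_JOINTS + [
    f"{side}_{joint}" for side in ("left", "right") for joint in SIDE_JOINTS
]


@dataclass
class JointMarker:
    marker_id: int   # stable id, so a marker can be updated in place
    name: str        # joint name, e.g. "left_elbow"
    position: Point  # (x, y, z) in metres, in the camera frame


def skeleton_to_markers(positions: Dict[str, Point]) -> List[JointMarker]:
    """Build one marker per joint that the tracker currently reports.

    Joints missing from `positions` (for example, occluded ones)
    are skipped rather than given a made-up position.
    """
    return [
        JointMarker(marker_id=i, name=name, position=positions[name])
        for i, name in enumerate(JOINT_NAMES)
        if name in positions
    ]


# Example frame with only two joints visible (made-up coordinates).
frame = {"head": (0.0, -0.6, 2.0), "left_hand": (-0.5, 0.1, 1.8)}
for marker in skeleton_to_markers(frame):
    print(marker)
```

Giving each joint a fixed id means a marker keeps its identity from frame to frame, so a visualiser can move it rather than redraw it.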