Reinforcement learning and planning for nonlinear control applications

Planning methods for optimal control use a model of the system and of the reward function to derive an optimal control strategy. Here we consider in particular optimistic planning, a predictive approach that optimistically explores possible action sequences from the current state. Because it can handle very general dynamics and objective functions, it has a wide range of potential applications in nonlinear control. Reinforcement learning methods preserve this generality and can additionally handle problems in which the model, and even the reward function, are unknown, so that a solution must be learned from data.
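To make the idea of optimistic exploration concrete, here is a minimal sketch of one common variant, optimistic planning for deterministic systems with discounted rewards. It is an illustration under stated assumptions, not the implementation used in the project: the names `optimistic_planning`, `f`, `rho`, `gamma`, and `budget` are hypothetical, the action set is assumed finite, and rewards are assumed to be normalized to [0, 1]. At each step the planner expands the leaf whose action sequence has the largest upper bound on its return, obtained by assuming that all future rewards equal 1.

```python
import heapq
import itertools


def optimistic_planning(x0, actions, f, rho, gamma=0.9, budget=200):
    """Return (first action, discounted return) of the best sequence found.

    Assumptions (illustrative): deterministic dynamics x' = f(x, u),
    rewards rho(x, u) in [0, 1], finite action list, 0 < gamma < 1.
    """
    tie = itertools.count()  # tie-breaker so the heap never compares states
    # Leaf entry: (-upper_bound, tie, state, depth, return_so_far, first_action)
    leaves = [(-1.0 / (1.0 - gamma), next(tie), x0, 0, 0.0, None)]
    best_return, best_action = -1.0, actions[0]

    for _ in range(budget):
        # Expand the most promising leaf, i.e. the one with the largest bound.
        _, _, x, depth, ret, first = heapq.heappop(leaves)
        for u in actions:
            child_ret = ret + gamma**depth * rho(x, u)
            # Optimistic bound: every reward after this step is taken as 1.
            child_bound = child_ret + gamma ** (depth + 1) / (1.0 - gamma)
            child_first = u if first is None else first
            if child_ret > best_return:
                best_return, best_action = child_ret, child_first
            heapq.heappush(
                leaves,
                (-child_bound, next(tie), f(x, u), depth + 1, child_ret, child_first),
            )
    return best_action, best_return


# Toy usage (hypothetical system): an integrator on the integers in [-5, 5],
# rewarded whenever the next state equals 2.
def f(x, u):
    return max(-5, min(5, x + u))


def rho(x, u):
    return 1.0 if f(x, u) == 2 else 0.0


action, value = optimistic_planning(0, [-1, 0, 1], f, rho)
```

In receding-horizon use, only the returned first action would be applied, and planning would be repeated from the next measured state. The priority queue keeps the selection of the most optimistic leaf cheap; the budget bounds the number of node expansions per control step.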

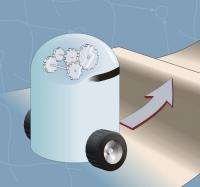

In this project, the student will work on fundamental developments in reinforcement learning and planning, on their real-time application to nonlinear control, or on a combination of the two. Fundamental directions include, for example, designing novel planning algorithms for switching, hybrid, and continuous-input systems, analyzing the near-optimality of these methods, and analyzing the stability properties of the optimal or near-optimal solutions. The application axis includes real-time control of nonlinear systems available in our lab, such as the Quanser rotational inverted pendulum, the Cyton Gamma robotic arm, or Parrot Mambo quadcopters. We will start from existing real-time control implementations; the movie below shows some existing results.

This project is suitable for motivated students who are able to invest themselves fully. Complementary skill sets are required, so we are looking both for people who are good at C/C++ and Matlab programming and for people who enjoy more analytical, mathematical challenges.