The project is funded under the PTE technology transfer program of the Romanian Authority for Scientific Research, via UEFISCDI (project number PN-IV-P7-7.1-PTE-2024-0105, contract number 43PTE from 05/05/2025), with the title “Metode de fuziune bazate pe AI pentru camere” (“AI-based fusion methods for cameras”). It takes place at the Automation Department of the Technical University of Cluj-Napoca, Romania, over a duration of 2 years, with a total budget of 300,000 EUR, in collaboration with Tvarita SRL.

Contact:

- UTCN (CO): Levente.Tamas@aut.utcluj.ro, tel: 0264401586, Str. Memorandumului 28, Cluj-Napoca, Ro.

- Tvarita (P1): Sergiu Daraban, email: sergiu.darabant@ubbcluj.ro

Abstract

Spatial perception and reasoning are becoming essential in the era of Industry 4.0, including in logistics and remote assistance applications. Recent developments in more powerful AI accelerators, integrated into or alongside embedded processors, are enabling a paradigm shift in environmental perception systems. This approach has several benefits, including more autonomous machines, reduced bandwidth requirements for cloud connectivity, and faster, more accurate decision-making. At the edge node, the system partitioning is changing by pushing data processing closer to the decision-making system. These preprocessing steps include semantic scene parsing based on object recognition and 6DoF pose estimation.

For the reasons above, Tvarita has developed an innovative 3D camera system that includes a 3D and, more recently, a 2D vision sensor, along with an AI-capable processor featuring high-speed communication interfaces. For the 3D smart camera system to be successfully integrated into all these applications, Tvarita needs configurable and redeployable computer vision algorithms that make the most of the information provided by the 3D and 2D cameras on embedded processors.

Driven by the current needs of Tvarita and relying on their expertise in machine vision, researchers at the academic partner UTCluj will work on the following major objectives: (i) developing high-performance algorithms for 3D and 2D sensor fusion, and (ii) extending the already available AI-based 3D algorithms.

Objectives

To address the challenges identified above, this project focuses on the following objectives:

O1: Development of specific algorithms for 2D-3D data fusion for the new generation of cameras from the industrial partner Tvarita Romania.

O2: Optimal representation for hybrid data flow in client-server applications based on advanced representation techniques using GCN and PVCNN models.

O3: Providing a set of client-oriented use cases for the new generation of hybrid cameras from Tvarita.

Planned project activities and deliverables

Phase 1: Fused data processing embedded nodes

Deliverables: 2D-3D cloud processing algorithms, publications

Phase 2: Heterogeneous 2D-3D data modalities

Deliverables: Object recognition algorithms, publications

Phase 3: Application-specific demos and tech transfer

Deliverables: Exploiting results in real conditions, publications

Outcomes

Phase 1: Fused data processing embedded nodes

The activities in this stage focused on consolidating an experimental framework for stereo image processing and accurate disparity estimation, as a foundation for the efficient use of a 3D camera in autonomous systems (e.g., robots) capable of navigating dynamic environments.

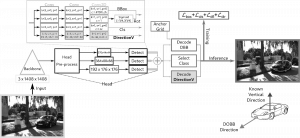
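For context, in a rectified stereo pair the disparity of a pixel relates to its depth through the standard pinhole relation Z = f · B / d, where f is the focal length in pixels, B the baseline between the two cameras, and d the disparity in pixels. The sketch below only illustrates this relation; the function name and the numeric values are hypothetical and do not describe the parameters of the Tvarita camera or the project's estimation method.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Convert a disparity (pixels) to depth (meters) for a rectified stereo pair.

    Uses the standard relation Z = f * B / d. All parameter names and the
    example values below are illustrative assumptions.
    """
    if disparity_px <= 0:
        # Zero or negative disparity carries no finite depth information.
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Hypothetical example: 500 px focal length, 10 cm baseline, 50 px disparity.
depth_m = disparity_to_depth(50.0, 500.0, 0.1)
print(depth_m)
```

Because depth is inversely proportional to disparity, a fixed disparity error of one pixel translates into a larger depth error for distant objects than for near ones, which is one reason accurate disparity estimation matters for navigation.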

Thus, using techniques based on artificial intelligence, the orientations of objects in a scene were estimated, as can be seen in the figure below:

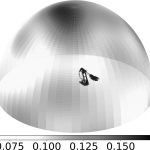

In a related activity, the optimal positions for observing the configuration of a robot arm were estimated, with the estimated results represented by a colored hemisphere indicating the optimal observation locations using a 2D camera:

Figure 3. Hemisphere showing the performance of 112 cameras in the simulator for detecting the height of the end effector of a robotic arm. The cameras are placed equidistantly in 9 rings of 12 cameras each, with one camera every 30° around the base of the arm and one ring every 10° from 0° to azimuth. Performance is reported as mean squared error (MSE).
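The ring layout described in the caption can be sketched as follows. This is a minimal illustration, not the project's simulator code: the function names, the unit radius, and the reading of the 10° step as an elevation angle above the base plane are all assumptions. With 9 rings of 12 cameras the sketch yields 108 positions; the caption reports 112 cameras, and the placement of the remaining ones is not specified here.

```python
import math


def ring_camera_positions(n_rings=9, cams_per_ring=12,
                          ring_step_deg=10.0, radius=1.0):
    """Return (x, y, z) camera positions on a hemisphere around the arm base.

    Assumption: ring k sits at elevation k * ring_step_deg above the base
    plane, and cameras in a ring are spaced 360 / cams_per_ring degrees apart.
    """
    positions = []
    for ring in range(n_rings):
        elevation = math.radians(ring * ring_step_deg)
        for cam in range(cams_per_ring):
            angle = math.radians(cam * 360.0 / cams_per_ring)
            x = radius * math.cos(elevation) * math.cos(angle)
            y = radius * math.cos(elevation) * math.sin(angle)
            z = radius * math.sin(elevation)
            positions.append((x, y, z))
    return positions


def mean_squared_error(predicted, target):
    """Mean squared error between two equally long sequences of numbers."""
    if len(predicted) != len(target) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(predicted)


cameras = ring_camera_positions()
print(len(cameras))  # 9 rings * 12 cameras
```

In such a setup, each camera position would be scored by the MSE of the end-effector height predicted from that viewpoint, and the scores mapped to colors on the hemisphere.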

Publications: